A Novel Explanatory Tabular Neural Network to Predicting Traffic Incident Duration Using Traffic Safety Big Data

Abstract

1. Introduction

| Research Approach | Typical Methods | Description |
|---|---|---|
| Statistical Approach | Hazard-based models [14,15,16,17,18], quantile regression [22,23], a copula-based approach [19], and finite mixture models [20,21] | Statistical methods build explicit models to explain how various factors influence incident duration. They differ mainly in how the model is constructed and in the choice of parameter estimation method. |
| Machine Learning Approach | Distance metric learning: (1) KNN [15]; (2) SVM [24] | KNN and SVM both measure the similarity between instances (KNN through an explicit distance metric, SVM through a kernel function). KNN predicts a new instance from its k nearest training instances, while SVM seeks the hyperplane that maximizes the margin between classes; its regression variant, SVR, is used for continuous targets such as duration. |
| | Ensemble learning: (1) RF [27,28]; (2) GBDT [34] | RF and gradient boosting decision trees (GBDT) both combine multiple decision trees to make predictions. RF builds independent trees on bootstrap samples with random feature selection and aggregates them by voting (or averaging, for regression), while GBDT builds trees sequentially, each one fitting the residuals of the trees before it. |
| | Neural network learning: (1) Bayesian neural network [31]; (2) ANN [25] | Neural network methods learn incident duration from data through layered network architectures. Bayesian neural networks use Bayesian inference to model and quantify uncertainty in the network's predictions, whereas a standard ANN optimizes its weights through backpropagation without explicitly modeling uncertainty. |
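
To make the distance-metric entry in the table concrete, the following is a minimal pure-Python sketch of k-nearest-neighbour regression for incident duration. It assumes Euclidean distance over already-encoded feature vectors and an unweighted mean of the k neighbours' durations; the function name `knn_predict`, the three toy features, and all numbers are hypothetical illustrations, not data or code from this study.

```python
import math


def knn_predict(train_x, train_y, query, k=3):
    """Predict a duration as the mean duration of the k nearest training incidents."""
    # Pair each training incident's Euclidean distance to the query with its duration
    scored = [(math.dist(x, query), y) for x, y in zip(train_x, train_y)]
    # Keep the k closest incidents
    nearest = sorted(scored, key=lambda pair: pair[0])[:k]
    return sum(y for _, y in nearest) / len(nearest)


# Toy encoded incidents: [peak, waterlogging, number_of_motor_vehicles]
train_x = [[1, 0, 2], [0, 0, 0], [1, 1, 3], [0, 1, 4], [1, 0, 1]]
train_y = [45.0, 30.0, 80.0, 60.0, 40.0]  # toy durations, not study data

print(knn_predict(train_x, train_y, [1, 0, 2], k=3))  # → 55.0
```

Because the vehicle count is unscaled here, it dominates the distance; in practice features would normally be standardized first so that binary indicators and counts contribute comparably.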

2. Model Principles

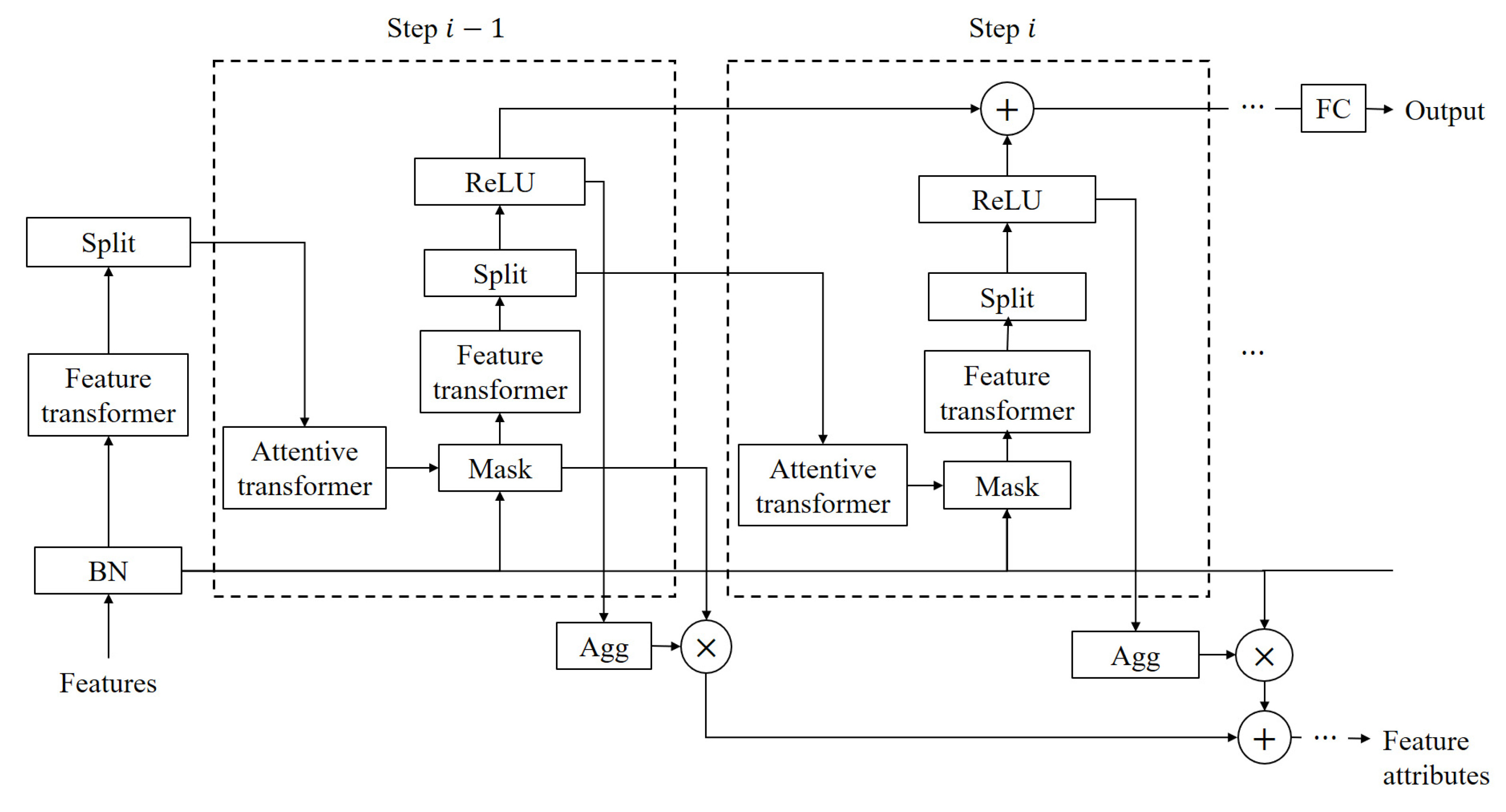

2.1. Structure of TabNet Model

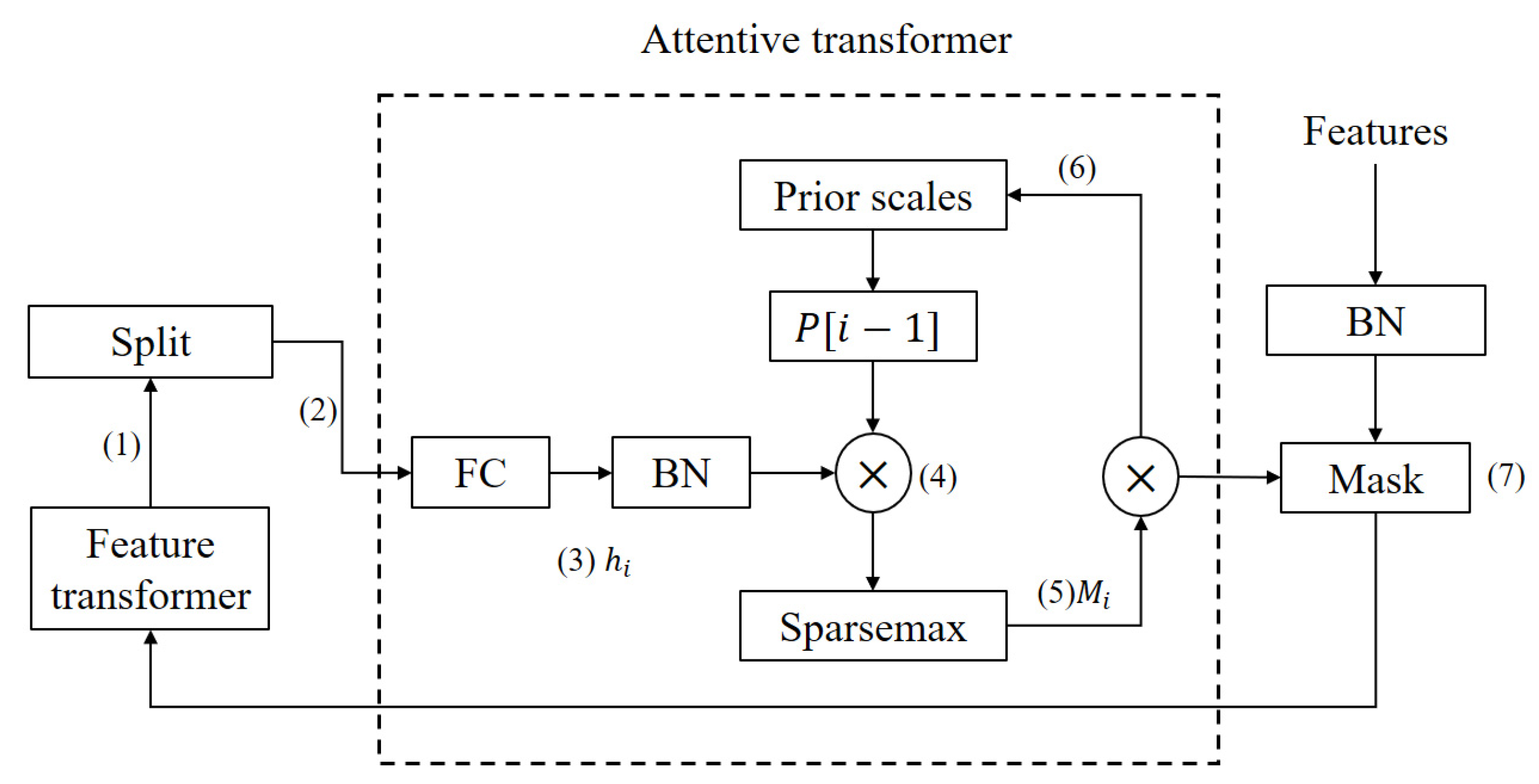

2.1.1. Feature Selection

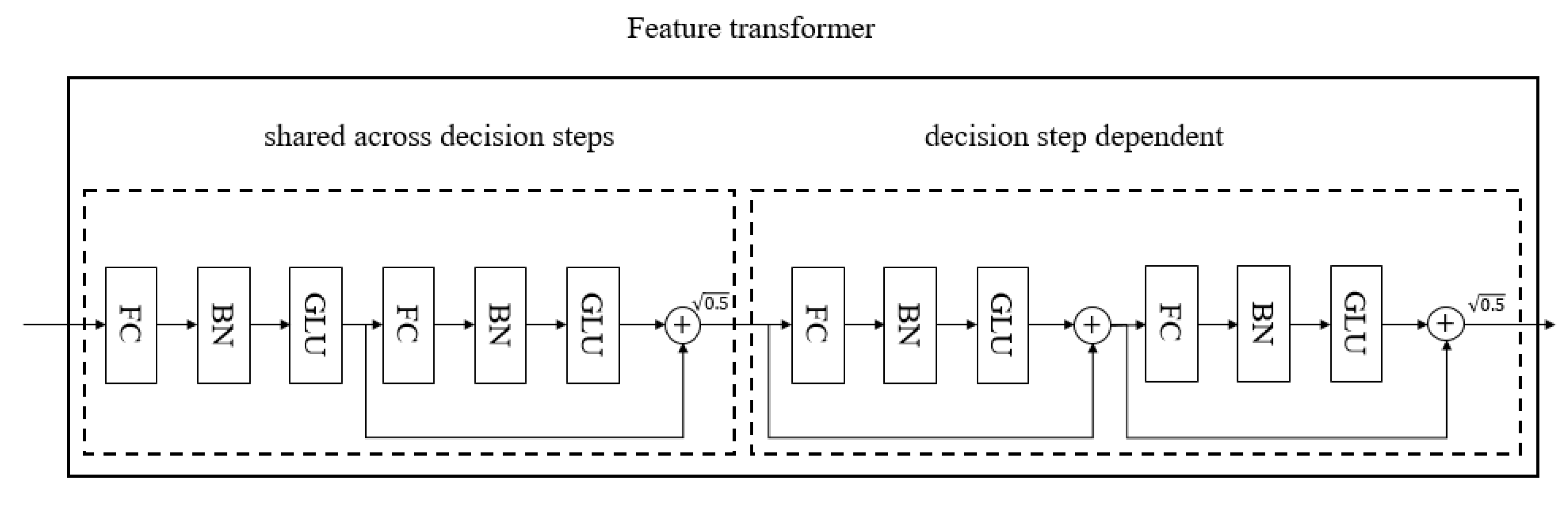

2.1.2. Feature Processing

- (1) Shared blocks: The weights of the shared blocks are shared across all decision steps, so the transformations they apply are identical at every decision step. Shared blocks extract patterns in the input features that are useful across all decision steps, which aids generalization and reduces the number of parameters.

- (2) Independent blocks: Conversely, the independent blocks have separate weights for each decision step, so the transformations they apply can differ from step to step. They allow each decision step to learn its own features or representations from the output of the shared blocks, which helps the model capture complex interactions and relationships among features.
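
The difference between shared and independent blocks can be sketched in pure Python as follows. This is a simplified illustration under stated assumptions, not TabNet's implementation: each block is a fully connected layer followed by a gated linear unit (GLU), batch normalization is omitted, residual connections use the √0.5 scaling described for TabNet [35], and the layer counts (two shared, two per step), sizes, and the class names `GLULayer` and `StepTransformer` are hypothetical choices for the example. The key point is that every step holds a reference to the same shared layer objects but creates its own independent layers.

```python
import math
import random


class GLULayer:
    """Fully connected layer followed by a gated linear unit (GLU).

    Simplification: the batch normalization TabNet applies before the GLU is omitted.
    """

    def __init__(self, n_in, n_out, rng):
        # 2 * n_out output rows: the first n_out give values, the rest give gates
        self.weights = [[rng.gauss(0.0, 0.1) for _ in range(n_in)]
                        for _ in range(2 * n_out)]
        self.n_out = n_out

    def n_params(self):
        return sum(len(row) for row in self.weights)

    def __call__(self, x):
        z = [sum(w * xi for w, xi in zip(row, x)) for row in self.weights]
        values, gates = z[:self.n_out], z[self.n_out:]
        # GLU: value * sigmoid(gate)
        return [v / (1.0 + math.exp(-g)) for v, g in zip(values, gates)]


class StepTransformer:
    """Feature transformer for one decision step (hypothetical name)."""

    def __init__(self, shared, n_hidden, n_independent, rng):
        self.shared = shared  # the SAME list of layers is passed to every step
        self.independent = [GLULayer(n_hidden, n_hidden, rng)
                            for _ in range(n_independent)]  # fresh weights per step

    def __call__(self, x):
        h = self.shared[0](x)  # first layer changes the dimension, so no residual
        for layer in self.shared[1:] + self.independent:
            # Residual connection scaled by sqrt(0.5)
            h = [(a + b) * math.sqrt(0.5) for a, b in zip(h, layer(h))]
        return h


rng = random.Random(0)
n_features, n_hidden, n_steps = 4, 8, 3
shared = [GLULayer(n_features, n_hidden, rng), GLULayer(n_hidden, n_hidden, rng)]
steps = [StepTransformer(shared, n_hidden, 2, rng) for _ in range(n_steps)]

shared_params = sum(layer.n_params() for layer in shared)
independent_params = sum(layer.n_params() for s in steps for layer in s.independent)
print(shared_params, independent_params)  # → 192 768
```

With sharing, the three steps together store the shared weights once (192 parameters); giving each step its own copy would require 576.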

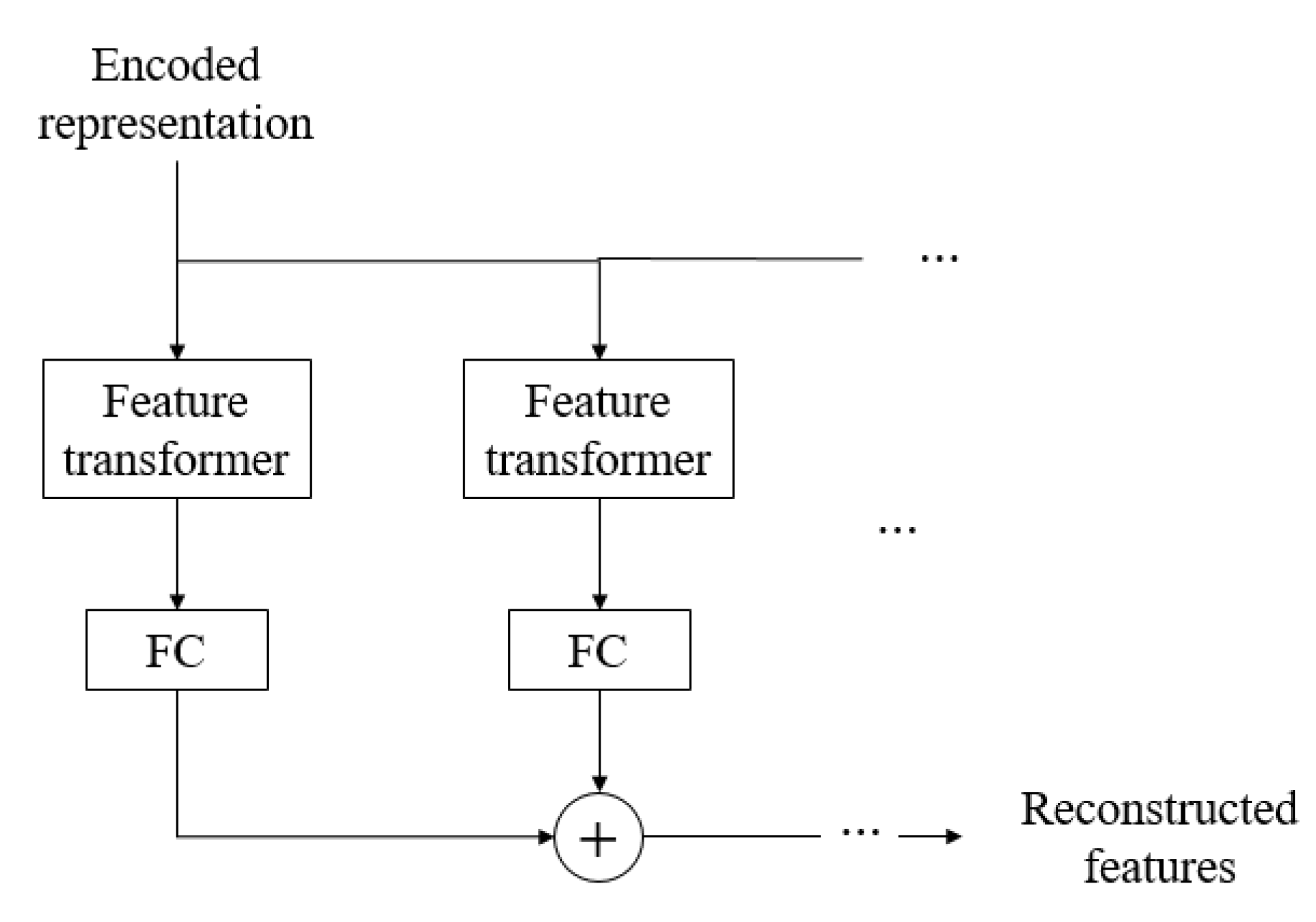

2.1.3. TabNet Decoder Architecture

2.2. Interpretability of TabNet Model

2.3. Alternative Models for Contrast

3. Dataset Description

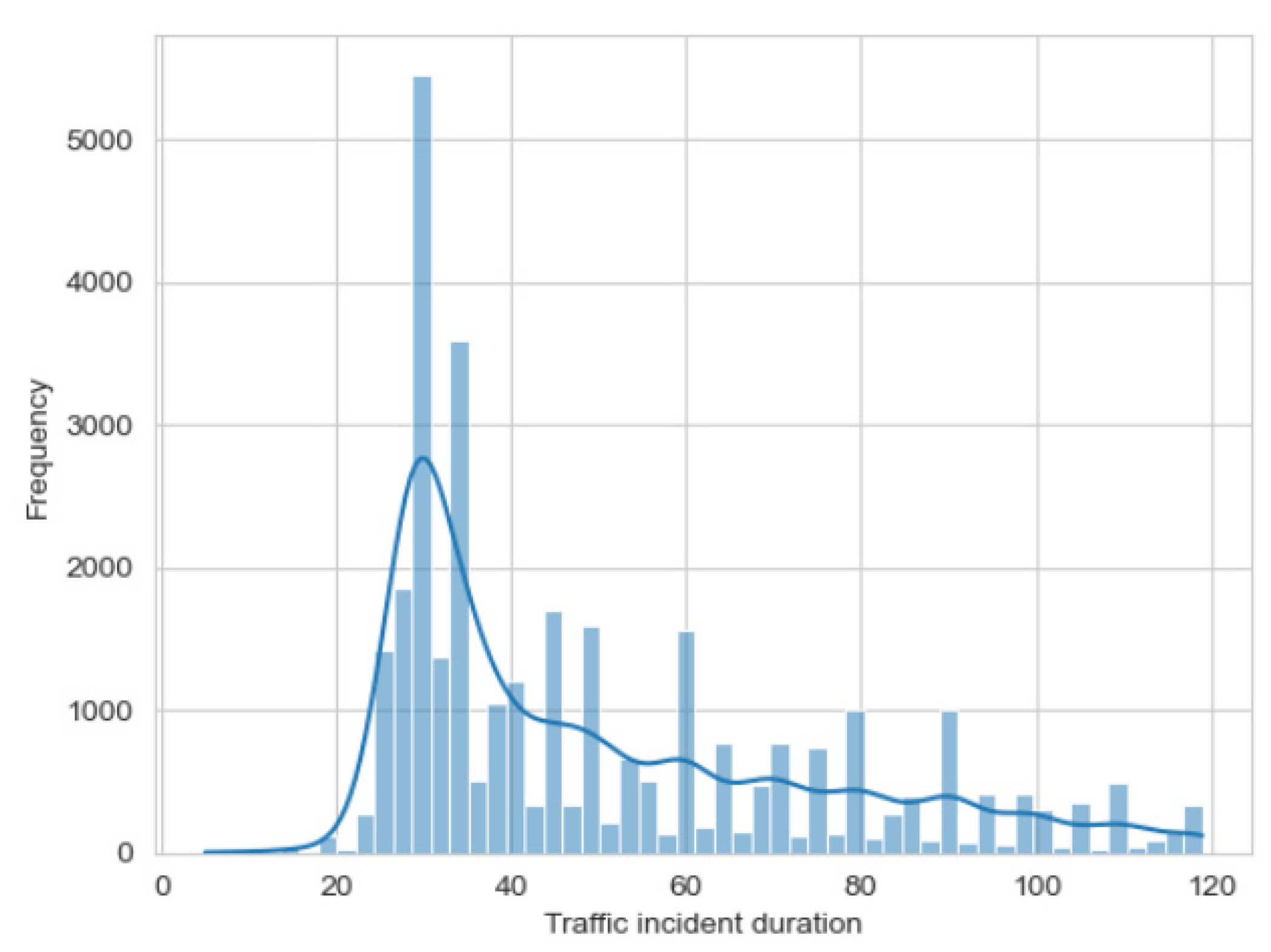

3.1. General Summary

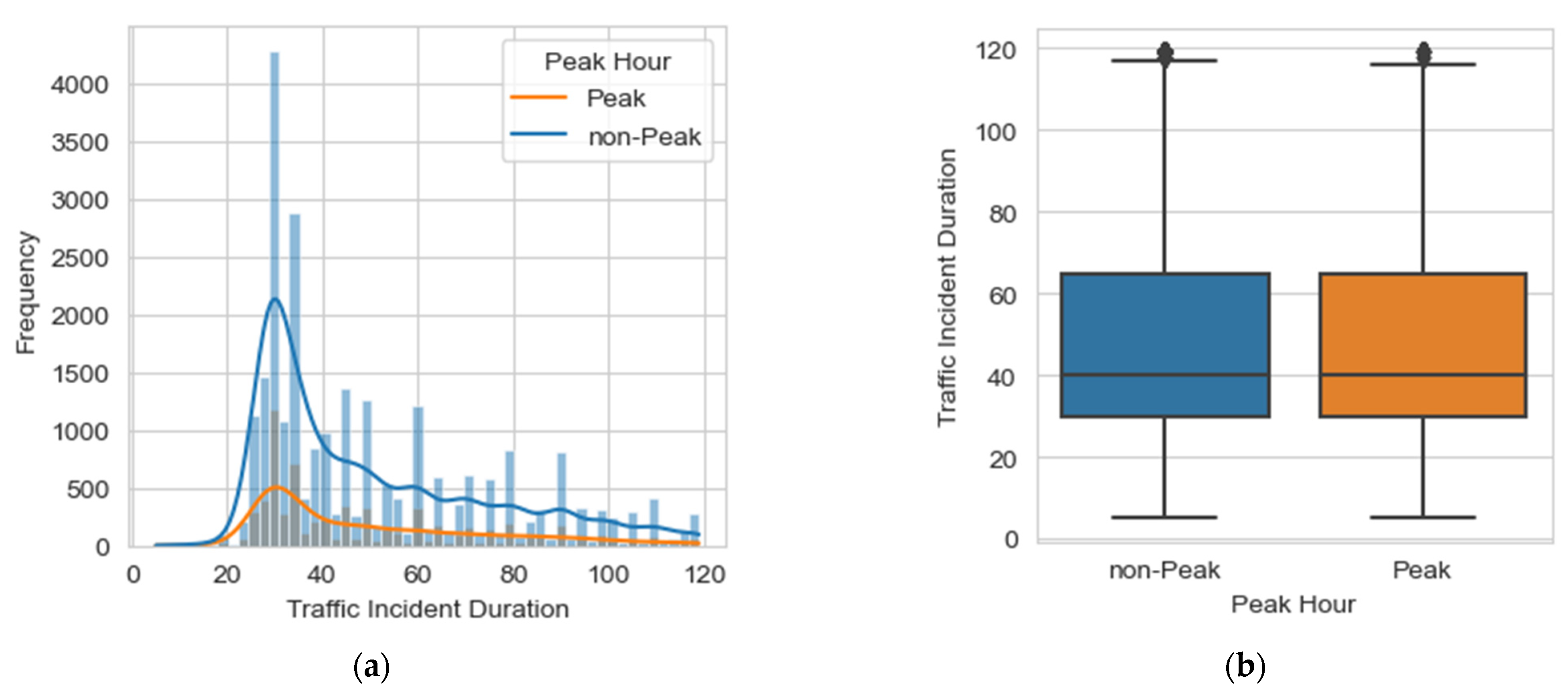

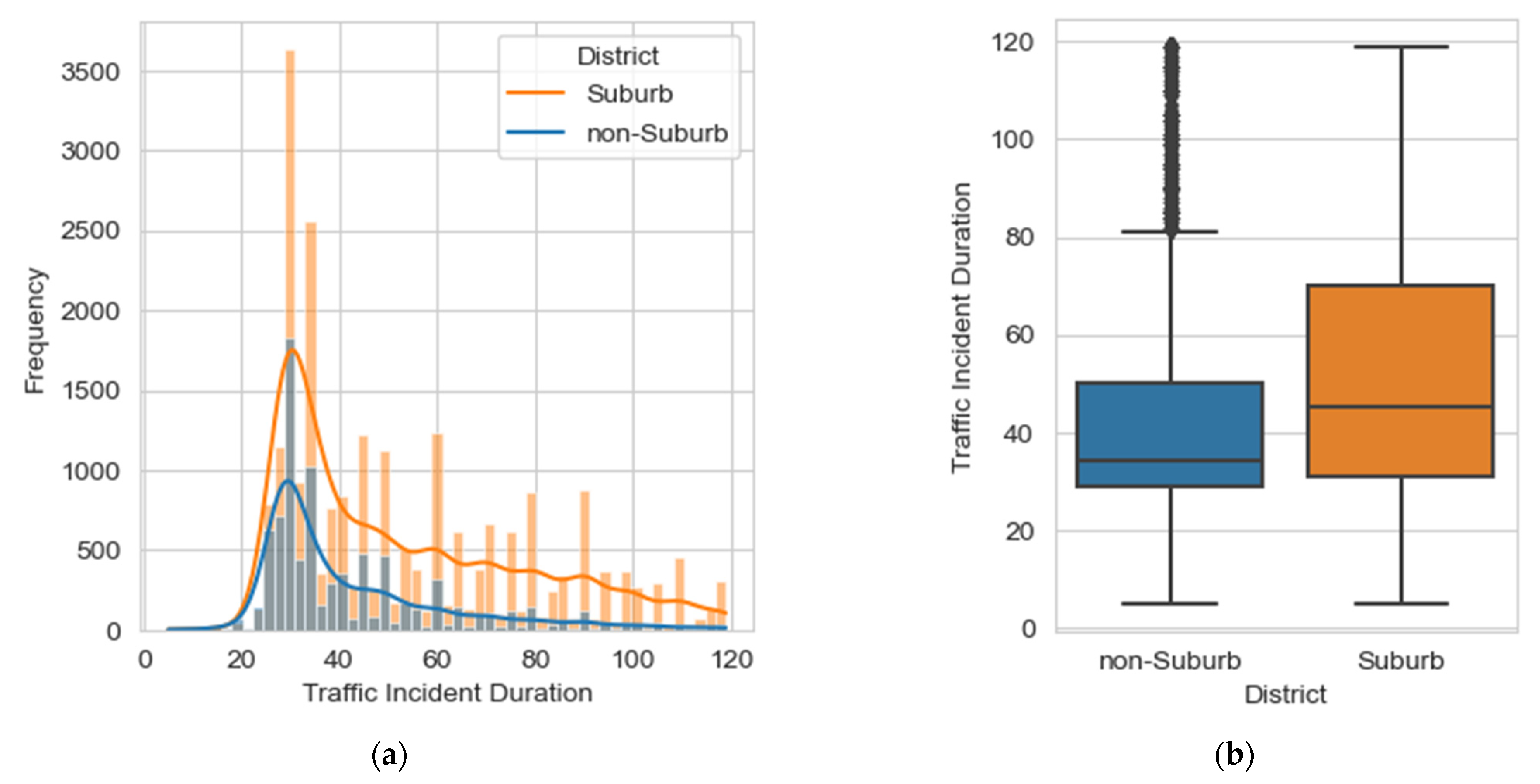

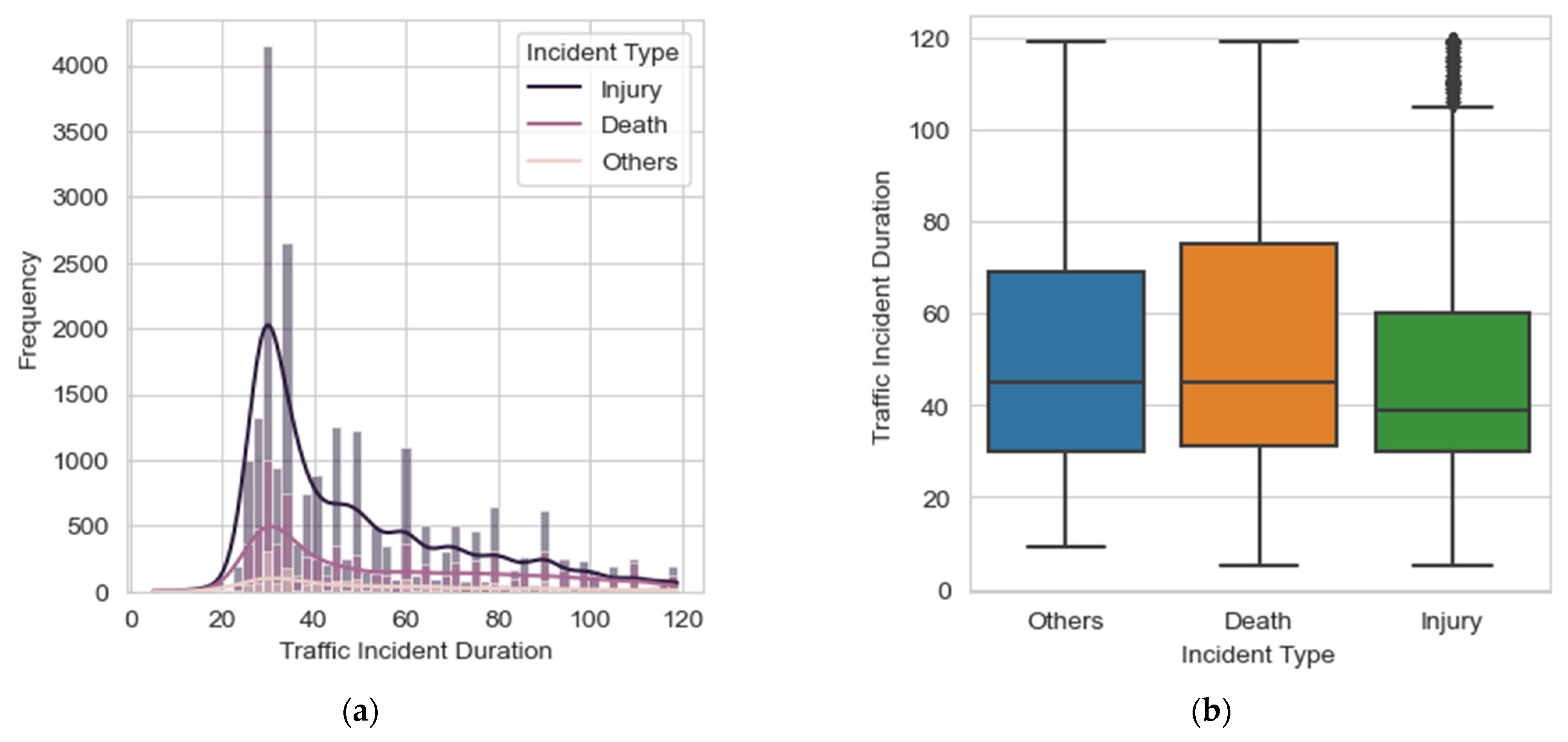

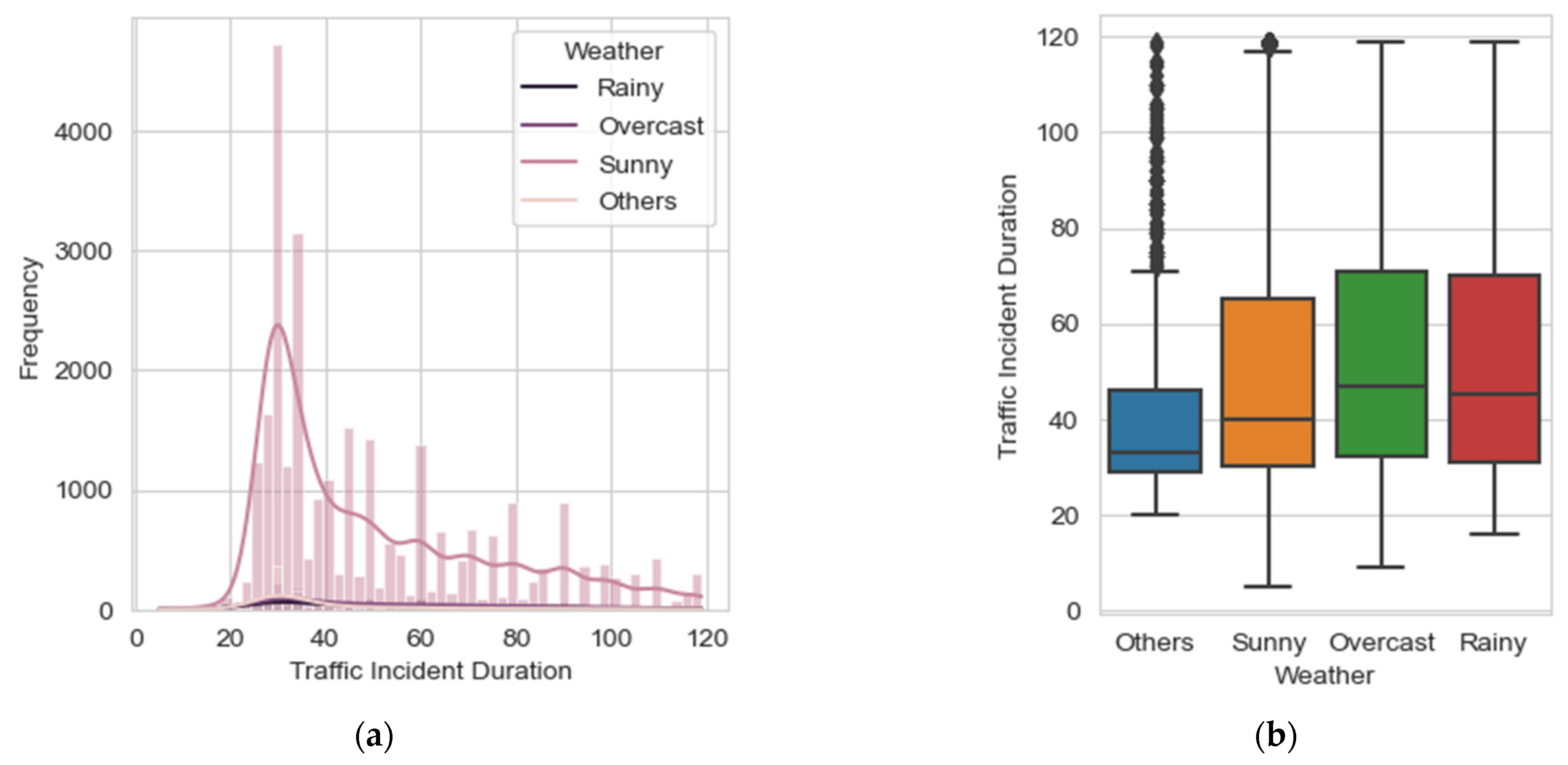

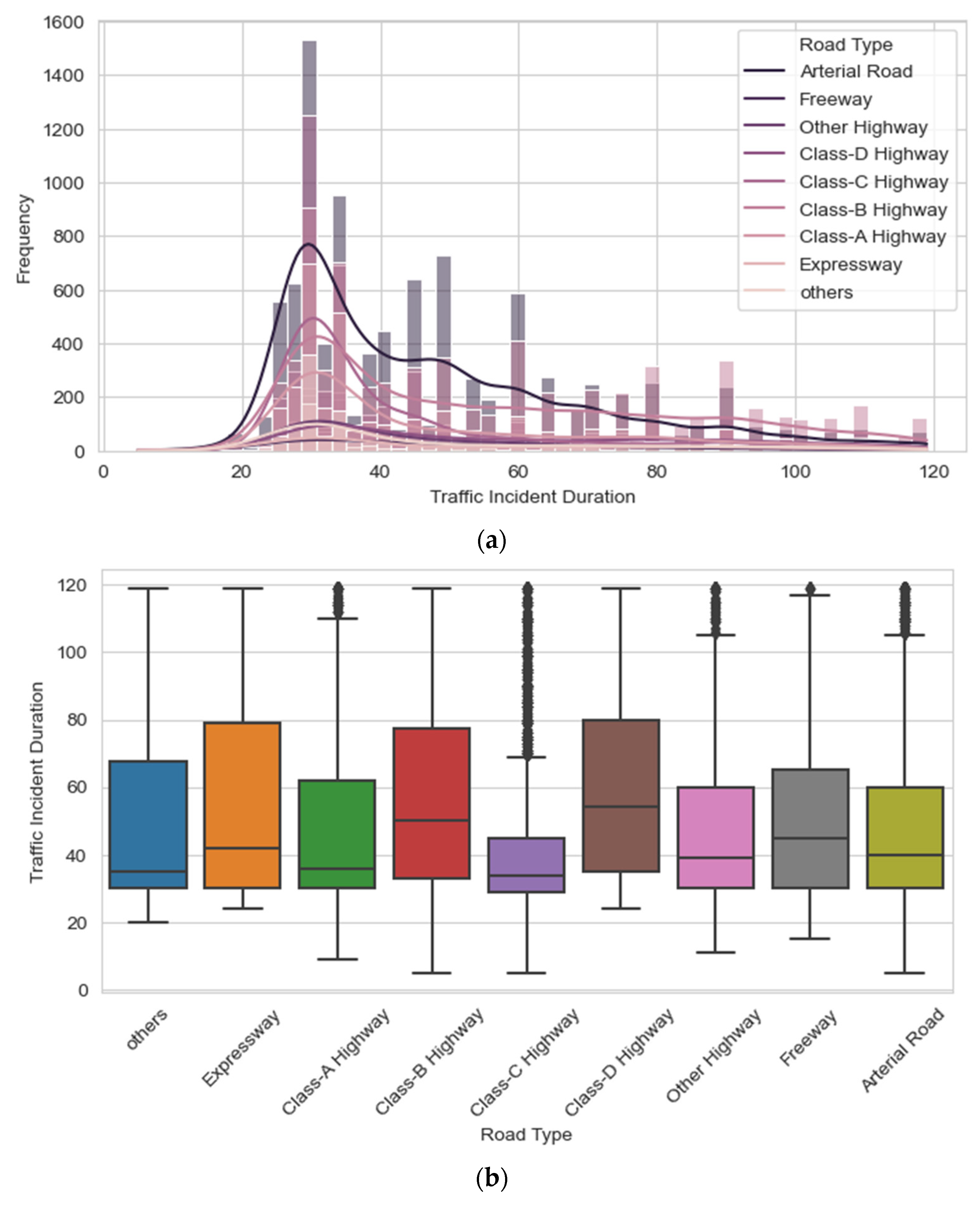

3.2. Characteristics by Different Categories

4. Experiment Results

4.1. Overall and Categorical Results

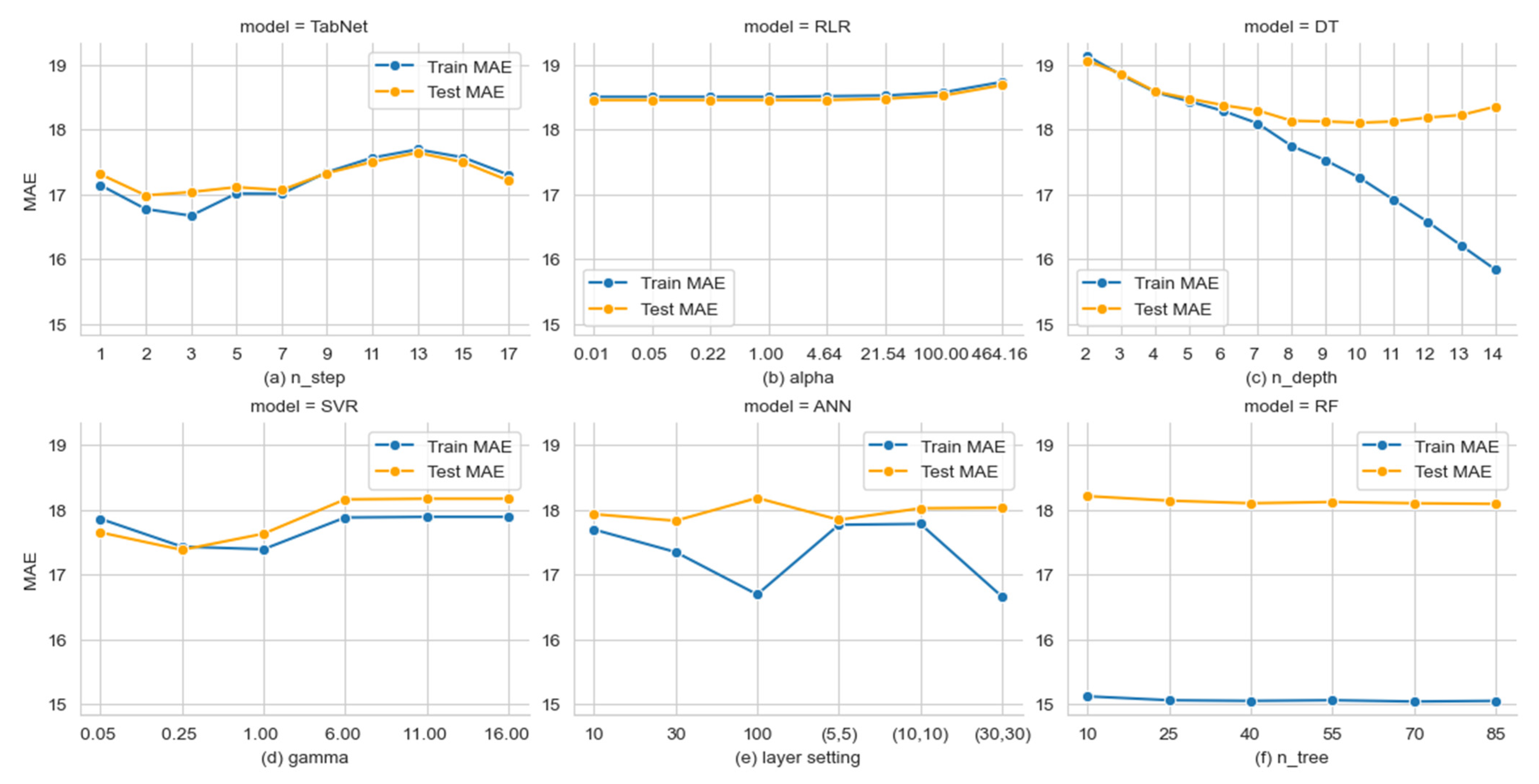

4.2. Impact of Parameter Settings

5. Further Discussion

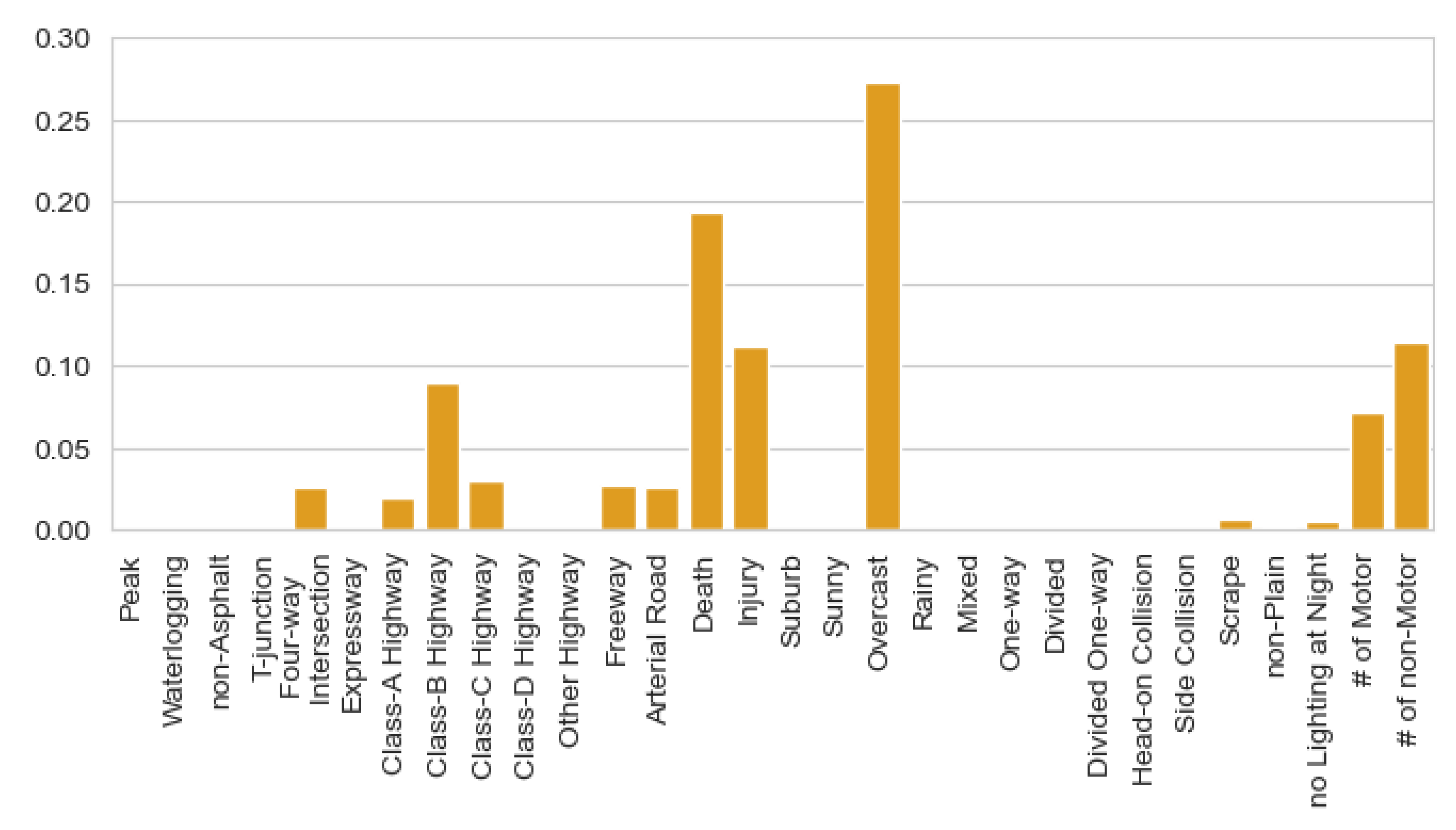

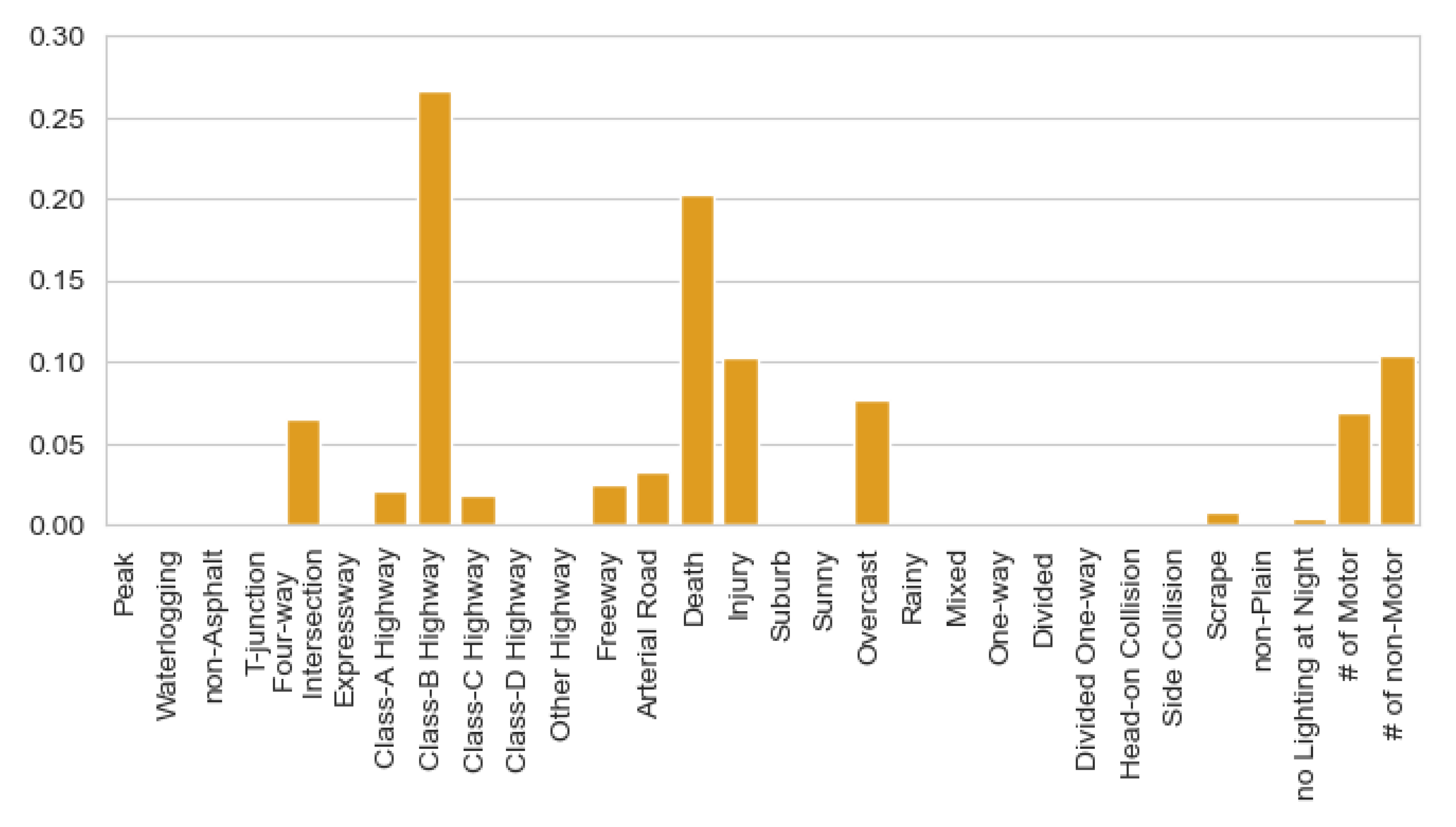

5.1. Numerical Feature Importance

5.2. Stepwise Feature Selection

6. Conclusions and Future Work

6.1. Conclusions

6.2. Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Mannering, F.L.; Bhat, C.R. Analytic methods in accident research: Methodological frontier and future directions. Anal. Methods Accid. Res. 2014, 1, 1–22.

2. Chung, Y.; Chiou, Y.; Lin, C. Simultaneous equation modeling of freeway accident duration and lanes blocked. Anal. Methods Accid. Res. 2015, 7, 16–28.

3. Shang, Q.; Xie, T.; Yu, Y. Prediction of duration of traffic incidents by hybrid deep learning based on multi-source incomplete data. Int. J. Environ. Res. Public Health 2022, 19, 10903.

4. Tang, J.; Zheng, L.; Han, C.; Yin, W.; Zhang, Y.; Zou, Y.; Huang, H. Statistical and machine-learning methods for clearance time prediction of road incidents: A methodology review. Anal. Methods Accid. Res. 2020, 27, 100123.

5. Hamad, K.; Obaid, L.; Nassif, A.B.; Abu Dabous, S.; Al-Ruzouq, R.; Zeiada, W. Comprehensive evaluation of multiple machine learning classifiers for predicting freeway incident duration. Innov. Infrastruct. Solut. 2023, 8, 177.

6. Valenti, G.; Lelli, M.; Cucina, D. A comparative study of models for the incident duration prediction. Eur. Transp. Res. Rev. 2010, 2, 103–111.

7. Ke, A.; Gao, Z.; Yu, R.; Wang, M.; Wang, X. A hybrid approach for urban expressway traffic incident duration prediction with Cox regression and random survival forests models. In Proceedings of the 2017 IEEE/ACIS 16th International Conference on Computer and Information Science (ICIS), Wuhan, China, 24–26 May 2017; pp. 113–118.

8. Wang, S.; Li, R.; Guo, M. Application of nonparametric regression in predicting traffic incident duration. Transport 2018, 33, 22–31.

9. Chand, S.; Li, Z.; Alsultan, A.; Dixit, V.V. Comparing and Contrasting the Impacts of Macro-Level Factors on Crash Duration and Frequency. Int. J. Environ. Res. Public Health 2022, 19, 5726.

10. Yang, L. Clearance time prediction of traffic accidents: A case study in Shandong, China. Australas. J. Disaster Trauma Stud. 2022, 26, 185–194.

11. Khattak, A.J.; Liu, J.; Wali, B.; Li, X.; Ng, M. Modeling traffic incident duration using quantile regression. Transp. Res. Rec. 2016, 2554, 139–148.

12. Li, R.; Pereira, F.C.; Ben-Akiva, M.E. Competing risks mixture model for traffic incident duration prediction. Accid. Anal. Prev. 2015, 75, 192–201.

13. Anastasopoulos, P.C.; Labi, S.; Bhargava, A.; Mannering, F.L. Empirical assessment of the likelihood and duration of highway project time delays. J. Constr. Eng. Manag. 2012, 138, 390–398.

14. Lin, L.; Wang, Q.; Sadek, A.W. A combined M5P tree and hazard-based duration model for predicting urban freeway traffic accident durations. Accid. Anal. Prev. 2016, 91, 114–126.

15. Araghi, B.N.; Hu, S.; Krishnan, R.; Bell, M.; Ochieng, W. A comparative study of k-NN and hazard-based models for incident duration prediction. In Proceedings of the 17th International IEEE Conference on Intelligent Transportation Systems (ITSC), Qingdao, China, 8–11 October 2014; pp. 1608–1613.

16. Pan, D.; Hamdar, S. From Traffic Analysis to Real-Time Management: A Hazard-Based Modeling for Incident Durations Extracted Through Traffic Detector Data Anomaly Detection. Transp. Res. Rec. 2023, 365415635.

17. Hojati, A.T.; Ferreira, L.; Washington, S.; Charles, P. Hazard based models for freeway traffic incident duration. Accid. Anal. Prev. 2013, 52, 171–181.

18. Mouhous, F.; Aissani, D.; Farhi, N. A stochastic risk model for incident occurrences and duration in road networks. Transp. A Transp. Sci. 2022, 1–33.

19. Zou, Y.; Ye, X.; Henrickson, K.; Tang, J.; Wang, Y. Jointly analyzing freeway traffic incident clearance and response time using a copula-based approach. Transp. Res. Part C Emerg. Technol. 2018, 86, 171–182.

20. Zou, Y.; Zhang, Y.; Lord, D. Analyzing different functional forms of the varying weight parameter for finite mixture of negative binomial regression models. Anal. Methods Accid. Res. 2014, 1, 39–52.

21. Zou, Y.; Henrickson, K.; Lord, D.; Wang, Y.; Xu, K. Application of finite mixture models for analysing freeway incident clearance time. Transp. A Transp. Sci. 2016, 12, 99–115.

22. Zou, Y.; Tang, J.; Wu, L.; Henrickson, K.; Wang, Y. Quantile analysis of factors influencing the time taken to clear road traffic incidents. In Proceedings of the Institution of Civil Engineers-Transport; Thomas Telford Ltd.: London, UK, 2017; Volume 170, pp. 296–304.

23. Fitzenberger, B.; Wilke, R.A. Using quantile regression for duration analysis. Allg. Stat. Arch. 2006, 90, 105–120.

24. Wu, W.; Chen, S.; Zheng, C. Traffic incident duration prediction based on support vector regression. In Proceedings of the ICCTP 2011: Towards Sustainable Transportation Systems, Nanjing, China, 17–17 September 2011; American Society of Civil Engineers: Reston, VA, USA, 2011; pp. 2412–2421.

25. Wang, W.; Chen, H.; Bell, M.C. Vehicle breakdown duration modelling. J. Transp. Stat. 2005, 8, 75–84.

26. Kim, W.; Chang, G. Development of a hybrid prediction model for freeway incident duration: A case study in Maryland. Int. J. Intell. Transp. Syst. Res. 2012, 10, 22–33.

27. Shang, Q.; Tan, D.; Gao, S.; Feng, L. A Hybrid Method for Traffic Incident Duration Prediction Using BOA-Optimized Random Forest Combined with Neighborhood Components Analysis. J. Adv. Transp. 2019, 2019, 4202735.

28. Zhao, H.; Gunardi, W.; Liu, Y.; Kiew, C.; Teng, T.; Yang, X.B. Prediction of traffic incident duration using clustering-based ensemble learning method. J. Transp. Eng. Part A Syst. 2022, 148, 4022044.

29. Song, Y.-Y.; Lu, Y. Decision tree methods: Applications for classification and prediction. Shanghai Arch. Psychiatry 2015, 27, 130.

30. Grigorev, A.; Mihaita, A.; Lee, S.; Chen, F. Incident duration prediction using a bi-level machine learning framework with outlier removal and intra–extra joint optimisation. Transp. Res. Part C Emerg. Technol. 2022, 141, 103721.

31. Ghosh, B.; Dauwels, J. Comparison of different Bayesian methods for estimating error bars with incident duration prediction. J. Intell. Transp. Syst. 2022, 26, 420–431.

32. Park, H.; Haghani, A.; Zhang, X. Interpretation of Bayesian neural networks for predicting the duration of detected incidents. J. Intell. Transp. Syst. 2016, 20, 385–400.

33. Boyles, S.; Fajardo, D.; Waller, S.T. A naive Bayesian classifier for incident duration prediction. In Proceedings of the 86th Annual Meeting of the Transportation Research Board, Washington, DC, USA, 1 January 2007.

34. Zhao, Y.; Deng, W. Prediction in traffic accident duration based on heterogeneous ensemble learning. Appl. Artif. Intell. 2022, 36, 2018643.

35. Arik, S.Ö.; Pfister, T. TabNet: Attentive interpretable tabular learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual Event, 2–9 February 2021; pp. 6679–6687.

36. Yan, J.; Xu, T.; Yu, Y.; Xu, H. Rainfall forecast model based on the TabNet model. Water 2021, 13, 1272.

37. Sun, C.; Li, S.; Cao, D.; Wang, F.; Khajepour, A. Tabular Learning-Based Traffic Event Prediction for Intelligent Social Transportation System. IEEE Trans. Comput. Soc. Syst. 2022, 10, 1199–1210.

38. Da Silva, I.N.; Spatti, D.H.; Flauzino, R.A.; Liboni, L.H.B.; Dos Reis Alves, S.F. Artificial Neural Networks; Springer: Cham, Switzerland, 2017.

39. Hamadneh, N.N.; Tahir, M.; Khan, W.A. Using artificial neural network with prey predator algorithm for prediction of the COVID-19: The case of Brazil and Mexico. Mathematics 2021, 9, 180.

40. Kesavaraj, G.; Sukumaran, S. A study on classification techniques in data mining. In Proceedings of the 2013 Fourth International Conference on Computing, Communications and Networking Technologies (ICCCNT), Tiruchengode, India, 4–6 July 2013; pp. 1–7.

41. Iranitalab, A.; Khattak, A. Comparison of four statistical and machine learning methods for crash severity prediction. Accid. Anal. Prev. 2017, 108, 27–36.

| Field | Variable Notation | Variable Type |
|---|---|---|
| Peak Hour | Peak | binary |
| Road Conditions | Waterlogging | binary |
| Road Surface Structure | Non-asphalt | binary |
| Intersection Type | T-junction | binary |
| | Four-way Intersection | binary |
| Road Type | Expressway | binary |
| | Class-A highway | binary |
| | Class-B highway | binary |
| | Class-C highway | binary |
| | Class-D highway | binary |
| | Other highway | binary |
| | Freeway | binary |
| | Arterial road | binary |
| Incident Type | Death | binary |
| | Injury | binary |
| District | Suburb | binary |
| Weather | Sunny | binary |
| | Overcast | binary |
| | Rainy | binary |
| Lane Configuration | Mixed | binary |
| | One-way | binary |
| | Divided | binary |
| | Divided one-way | binary |
| Collision Type | Head-on collision | binary |
| | Side collision | binary |
| | Scrape | binary |
| Topography | Non-plain | binary |
| Lighting Conditions | No lighting at night | binary |
| Number of Motor Vehicles | / | numerical |
| Number of Non-motor Vehicles | / | numerical |
| Traffic Incident Duration | / | numerical |
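
The variable table lists each categorical field as one or more binary indicators plus a few numerical counts. The sketch below shows one way a raw incident record could be turned into such features. It assumes (this is not stated in the table) that any level not listed for a field acts as an omitted reference category encoded as all zeros; the dictionary keys, the helper `encode_incident`, and the reference level "Property damage only" are hypothetical, and only a subset of fields is shown.

```python
# Hypothetical field names and levels for a subset of the variable table.
# ASSUMPTION: a level not listed here is the omitted reference category,
# encoded as all zeros.
INDICATOR_LEVELS = {
    "weather": ["Sunny", "Overcast", "Rainy"],
    "incident_type": ["Death", "Injury"],
    "intersection_type": ["T-junction", "Four-way Intersection"],
}
NUMERICAL_FIELDS = ["motor_vehicles", "non_motor_vehicles"]


def encode_incident(record):
    """Convert one raw incident record into binary indicators plus numerical counts."""
    features = {}
    for field, levels in INDICATOR_LEVELS.items():
        for level in levels:
            # 1 if the record takes this level, 0 otherwise
            features[f"{field}={level}"] = int(record[field] == level)
    for field in NUMERICAL_FIELDS:
        features[field] = record[field]  # counts are passed through unchanged
    return features


raw = {
    "weather": "Rainy",
    "incident_type": "Property damage only",  # hypothetical reference level
    "intersection_type": "T-junction",
    "motor_vehicles": 2,
    "non_motor_vehicles": 0,
}
print(encode_incident(raw))
```

Dropping one reference level per field avoids perfectly collinear indicator columns, which matters mainly for the linear baseline (RLR) rather than for tree-based or neural models.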

| Category | Model | MAE | RMSE | MAPE |
|---|---|---|---|---|
| Whole Dataset | ANN | 17.83 | 22.52 | 36.91% |
| | DT | 18.11 | 22.88 | 37.38% |
| | RF | 18.09 | 23.29 | 37.08% |
| | RLR | 18.46 | 22.80 | 37.92% |
| | SVR | 17.38 | 24.26 | 34.55% |
| | TabNet | 17.04 | 22.01 | 33.60% |
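
The results are reported as MAE, RMSE, and MAPE. Their formulas are not restated here, so the sketch below assumes the standard definitions (MAPE expressed in percent). The helper names and the four toy durations are illustrative only.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent (assumes no observed value is 0)."""
    return 100.0 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / len(y_true)


y_true = [30.0, 60.0, 45.0, 90.0]  # toy observed durations
y_pred = [40.0, 50.0, 45.0, 60.0]  # toy predicted durations
print(mae(y_true, y_pred), rmse(y_true, y_pred), mape(y_true, y_pred))
```

Because squaring weights large errors more heavily, RMSE is always at least as large as MAE, and a wide gap between the two suggests a few large errors. This is one plausible reading of the SVR row above, whose MAE (17.38) is the second lowest while its RMSE (24.26) is the highest of the six models.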

| Category | Model | MAE | RMSE | MAPE | Category | Model | MAE | RMSE | MAPE |
|---|---|---|---|---|---|---|---|---|---|
| Suburb | ANN | 19.18 | 23.70 | 38.61% | Death | ANN | 21.26 | 25.65 | 42.05% |
| | DT | 19.53 | 24.12 | 39.33% | | DT | 21.59 | 26.19 | 42.31% |
| | RF | 19.48 | 24.56 | 38.85% | | RF | 21.55 | 26.87 | 42.14% |
| | RLR | 19.71 | 24.04 | 39.83% | | RLR | 21.98 | 26.15 | 43.88% |
| | SVR | 18.86 | 25.76 | 35.50% | | SVR | 21.46 | 28.59 | 38.33% |
| | TabNet | 18.35 | 23.21 | 34.11% | | TabNet | 21.52 | 25.94 | 40.45% |
| non-Suburb | ANN | 14.05 | 18.82 | 32.16% | Injury | ANN | 16.74 | 21.42 | 35.42% |
| | DT | 14.13 | 19.03 | 31.92% | | DT | 17.03 | 21.71 | 36.13% |
| | RF | 14.22 | 19.34 | 32.15% | | RF | 16.95 | 21.97 | 35.56% |
| | RLR | 14.24 | 18.90 | 32.58% | | RLR | 17.07 | 21.62 | 36.13% |
| | SVR | 13.23 | 19.47 | 31.51% | | SVR | 15.95 | 22.57 | 33.10% |
| | TabNet | 13.37 | 18.22 | 32.74% | | TabNet | 15.83 | 20.82 | 32.03% |
| Peak | ANN | 17.31 | 22.02 | 37.90% | Class-B Highway | ANN | 22.02 | 25.99 | 45.56% |
| | DT | 17.59 | 22.28 | 38.48% | | DT | 22.29 | 26.17 | 46.60% |
| | RF | 17.84 | 22.96 | 38.86% | | RF | 22.18 | 26.59 | 45.36% |
| | RLR | 17.56 | 21.91 | 38.61% | | RLR | 22.47 | 26.28 | 46.32% |
| | SVR | 16.89 | 23.13 | 35.96% | | SVR | 21.47 | 27.59 | 41.21% |
| | TabNet | 16.41 | 21.31 | 35.26% | | TabNet | 21.17 | 25.40 | 40.83% |
| Arterial Road | ANN | 15.13 | 19.71 | 31.55% | Expressway | ANN | 21.82 | 25.87 | 43.33% |
| | DT | 15.09 | 19.70 | 31.17% | | DT | 23.35 | 26.76 | 51.58% |
| | RF | 15.40 | 20.34 | 31.62% | | RF | 21.53 | 25.99 | 42.91% |
| | RLR | 15.30 | 19.81 | 31.88% | | RLR | 22.85 | 26.73 | 46.90% |
| | SVR | 14.93 | 21.30 | 31.32% | | SVR | 21.70 | 28.95 | 39.06% |
| | TabNet | 14.34 | 19.09 | 30.56% | | TabNet | 21.50 | 25.92 | 40.48% |
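
To read the categorical table metric by metric, the small helper below reports which model attains the lowest value of each metric within a category. The numbers are copied from the table above for three of its categories; the helper name `best_models` and the tuple layout are illustrative choices. For these three categories, the lowest-error model differs across metrics.

```python
# MAE, RMSE, MAPE (%) copied from the categorical results table (three categories)
RESULTS = {
    "non-Suburb": {
        "ANN": (14.05, 18.82, 32.16), "DT": (14.13, 19.03, 31.92),
        "RF": (14.22, 19.34, 32.15), "RLR": (14.24, 18.90, 32.58),
        "SVR": (13.23, 19.47, 31.51), "TabNet": (13.37, 18.22, 32.74),
    },
    "Death": {
        "ANN": (21.26, 25.65, 42.05), "DT": (21.59, 26.19, 42.31),
        "RF": (21.55, 26.87, 42.14), "RLR": (21.98, 26.15, 43.88),
        "SVR": (21.46, 28.59, 38.33), "TabNet": (21.52, 25.94, 40.45),
    },
    "Expressway": {
        "ANN": (21.82, 25.87, 43.33), "DT": (23.35, 26.76, 51.58),
        "RF": (21.53, 25.99, 42.91), "RLR": (22.85, 26.73, 46.90),
        "SVR": (21.70, 28.95, 39.06), "TabNet": (21.50, 25.92, 40.48),
    },
}
METRICS = ("MAE", "RMSE", "MAPE")


def best_models(results):
    """Return {category: {metric: name of the model with the lowest value}}."""
    best = {}
    for category, models in results.items():
        best[category] = {
            # Lower is better for all three error metrics
            metric: min(models, key=lambda m: models[m][i])
            for i, metric in enumerate(METRICS)
        }
    return best


for category, winners in best_models(RESULTS).items():
    print(category, winners)
```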

| Model | Parameter | Value |
|---|---|---|
| TabNet | n_step | 1, 2, 3, 5, 7, 9, 11, 13, 15, and 17 |
| RLR | alpha | 0.01, 0.05, 0.22, 1.00, 4.64, 21.54, 100, and 464.16 (log scale) |
| DT | n_depth | 5–14 |
| SVR | gamma | 0.05, 0.25, 1, 6, 11, and 16 |
| ANN | layer setting | 10, 30, 100, (5,5), (10,10), and (30,30) |
| RF | n_tree | 10, 25, 40, 55, 70, and 85 |
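
The RLR alpha values are described as lying on a log scale. One way to generate such a grid is sketched below, assuming eight points evenly spaced in log10 space between 10^-2 and 10^(8/3); these endpoints are inferred from the listed values rather than stated in the text. A generic grid search is included for context. `log_grid`, `grid_search`, and `toy_error` are hypothetical helpers, and `toy_error` merely stands in for fitting a model with each alpha and scoring it on validation data.

```python
import math


def log_grid(low_exp, high_exp, n):
    """n values evenly spaced in log10 space from 10**low_exp to 10**high_exp."""
    step = (high_exp - low_exp) / (n - 1)
    return [10 ** (low_exp + k * step) for k in range(n)]


def grid_search(candidates, validation_error):
    """Return the candidate whose validation error is lowest."""
    return min(candidates, key=validation_error)


alphas = log_grid(-2, 8 / 3, 8)
print([round(a, 2) for a in alphas])
# → [0.01, 0.05, 0.22, 1.0, 4.64, 21.54, 100.0, 464.16]


def toy_error(alpha):
    # HYPOTHETICAL stand-in for training RLR with this alpha and scoring it on
    # validation data; here the error is simply smallest near alpha = 10**0.5
    return abs(math.log10(alpha) - 0.5)


print(round(grid_search(alphas, toy_error), 2))  # → 4.64
```

A log-spaced grid covers several orders of magnitude with few points, which suits regularization strengths whose effect changes roughly multiplicatively; the other parameters in the table are searched on linear grids.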

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, H.; Li, Y. A Novel Explanatory Tabular Neural Network to Predicting Traffic Incident Duration Using Traffic Safety Big Data. Mathematics 2023, 11, 2915. https://doi.org/10.3390/math11132915
